Pipeline Description¶

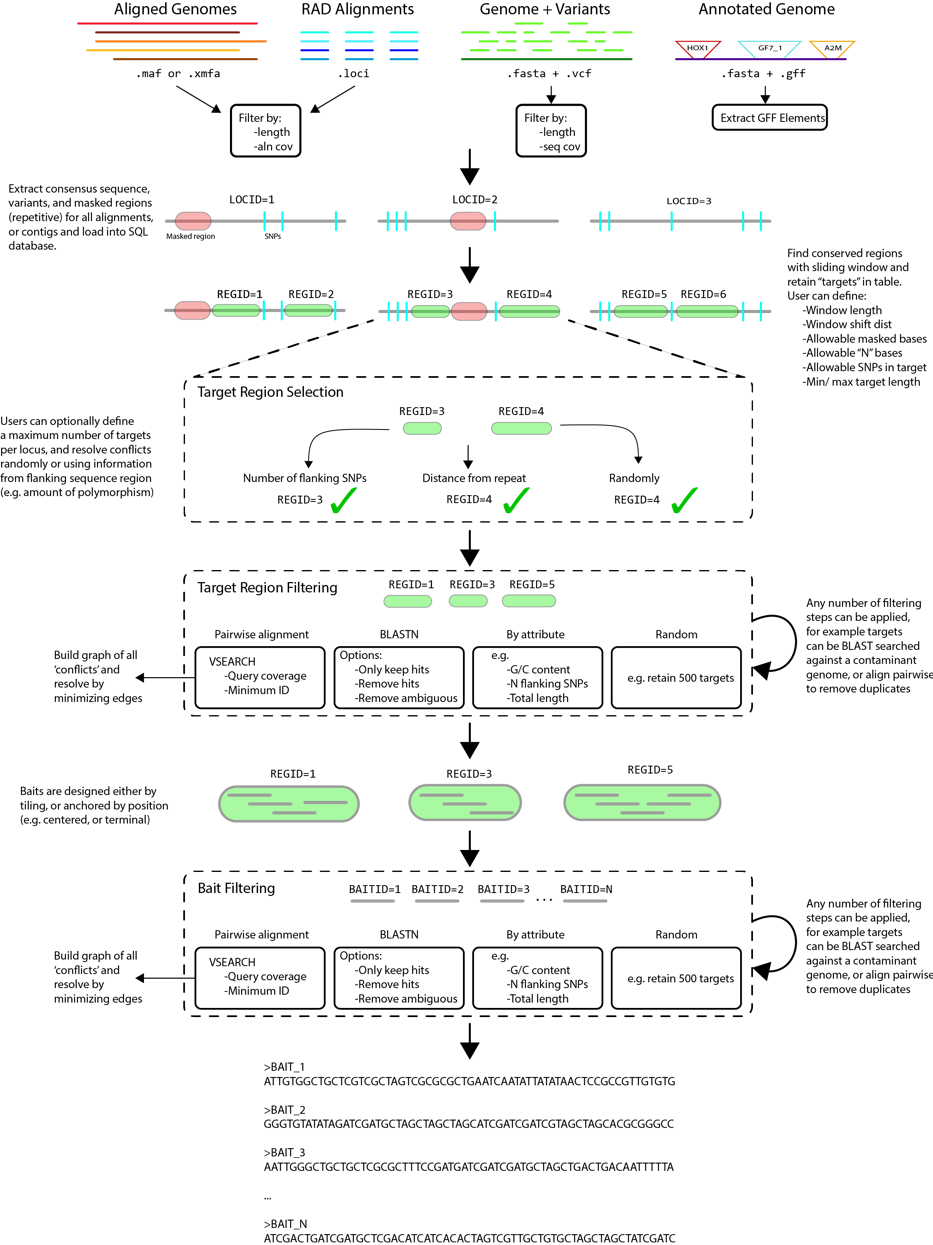

The general process (summarized in figure below) is built on a relational database in SQLite, populated, accessed, and parsed in Python. It takes a variety of input file formats, and is written modularly such that adding additional capabilities (e.g. input file formats, filtering schema) can be done without too much difficulty. The workflow is divided into 5 steps, as follows:

- Alignments (provided as .xmfa, .loci, or .maf) or genomes (provided as .fasta, annotated with .vcf or .gff) will be used to build a consensus sequence of each locus.

- A sliding window will be applied to each consensus to find candidate targets for which baits could be designed

- Targets are then selected (if too close together, or only one allowed per locus), and filtered according to any number of specified filter (e.g. GC content, flanking SNPs, pairwise alignment)

- Passing targets are then parsed to design a putative set of baits

- Baits are then filtered according to selected criteria, and output as FASTA.

- The pipeline can be resumed and any steps iteratively re-visited by providing the SQLite database file (resulting in a significant reduction in runtime for successive runs)